Serverless batched R scripts via Cloud Build

2021-03-19

Source: vignettes/cloudbuild.Rmd

Cloud Build uses Docker containers to run everything. This means it can run almost any language, program or application, including R. Having an easy way to create and trigger these builds from R means R can serve as a UI or gateway to any other program, e.g. R can trigger a Cloud Build that uses gcloud to deploy Cloud Run applications.

The first 120 minutes per day are free. See here for more price info.
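As a rough illustration of the free allowance, the sketch below estimates billable build minutes for a day. It assumes the 120 free minutes are simply subtracted from that day's usage; the function name `billable_minutes` is made up for this example, and actual prices and billing rules (which are on the pricing page) are not modelled.

```r
# Sketch only: assumes the daily free allowance is subtracted from usage.
# billable_minutes() is a hypothetical helper, not part of any package.
billable_minutes <- function(minutes_used, free_minutes = 120) {
  stopifnot(is.numeric(minutes_used), all(minutes_used >= 0))
  # Usage below the allowance costs nothing, so clamp at zero
  pmax(0, minutes_used - free_minutes)
}

# Three example days: under, exactly at, and over the allowance
billable_minutes(c(45, 120, 200))
```

Only the third day in this example goes over the allowance.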

If you want to run scripts that can be triggered one time, batch style, and set them up to trigger on GitHub events or pub/sub, or schedule them using Cloud Scheduler then Cloud Build is suited to your use case.

If you would also like your R code to react in real time to events such as HTTP or pub/sub events, for example as a website or API endpoint, consider Cloud Run.

The cloudbuild.yaml format

Cloud Build is centered around the cloudbuild.yaml format - you can use existing cloudbuild.yaml files or create your own in R using the cloudbuild.yaml helper functions.

An example cloudbuild.yaml is shown below - this outputs the version of Docker, echoes “Hello Cloud Build” and calls an R function:

steps:
- name: 'gcr.io/cloud-builders/docker'
  id: Docker Version
  args: ["version"]
- name: 'alpine'
  id: Hello Cloud Build
  args: ["echo", "Hello Cloud Build"]
- name: 'rocker/r-base'
  id: Hello R
  args: ["R", "-e", "paste0('1 + 1 = ', 1+1)"]

This cloudbuild.yaml file can be built directly via the cr_build() function. By default the build will open a website URL showing the build logs.

b1 <- cr_build("cloudbuild.yaml")

Or you can choose to wait in R for the build to finish, like below:

b2 <- cr_build("cloudbuild.yaml", launch_browser = FALSE)
b3 <- cr_build_wait(b2)
# Waiting for build to finish:
# |===||
# Build finished
# ==CloudBuildObject==
# buildId: c673143a-794d-4c69-8ad4-e777d068c066
# status: SUCCESS
# logUrl: https://console.cloud.google.com/gcr/builds/c673143a-794d-4c69-8ad4-e777d068c066?project=1080525199262
# ...

You can export an existing build into a cloudbuild.yaml file, for instance to run in another project not using R.

cr_build_write(b3, file = "cloudbuild.yml")

Constructing Cloud Build objects

There are several layers to creating Cloud Build objects in R:

- cr_build() triggers the API with Cloud Build objects and cloudbuild.yaml files

- cr_build_yaml() lets you create the Cloud Build objects in R and can write to .yaml files.

- cr_build_make() creates Cloud Build R objects directly from .yaml files

- cr_buildstep() lets you create specific steps within the Cloud Build objects. There are helper template files with common tasks such as cr_buildstep_r()
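To show how these layers fit together, the sketch below rebuilds the three-step example cloudbuild.yaml from earlier using cr_buildstep() and cr_build_yaml(). It assumes googleCloudRunner is installed and makes no API call. The use of `prefix = ""` to stop the default `gcr.io/cloud-builders/` prefix being added to Docker Hub image names reflects my reading of the `prefix` argument, so check ?cr_buildstep before relying on it.

```r
# Sketch only: assumes the googleCloudRunner package is installed.
# Nothing is sent to Google Cloud; the objects are built locally.
if (requireNamespace("googleCloudRunner", quietly = TRUE)) {
  library(googleCloudRunner)

  steps <- c(
    # by default the name is prefixed with "gcr.io/cloud-builders/"
    cr_buildstep("docker", "version", id = "Docker Version"),
    # prefix = "" is assumed to leave Docker Hub image names untouched
    cr_buildstep("alpine", c("echo", "Hello Cloud Build"),
                 id = "Hello Cloud Build", prefix = ""),
    cr_buildstep("rocker/r-base",
                 c("R", "-e", "paste0('1 + 1 = ', 1+1)"),
                 id = "Hello R", prefix = "")
  )

  # Printing the yaml object shows what the file would contain
  print(cr_build_yaml(steps = steps))
}
```

Passing the resulting yaml object to cr_build() would submit it, which requires an authenticated project.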

Docker images to use in Cloud Build

Any utility that has a Docker image can be used within Cloud Build steps.

Official Google images for gcloud, bq and gsutil are here: https://github.com/GoogleCloudPlatform/cloud-sdk-docker

Some community-contributed Cloud Build images are listed here, including hugo, make, and tar. Alternatively, you can write your own Dockerfile and build the image you need yourself, perhaps by using a previous Cloud Build and cr_deploy_docker().

Cloud Build source

Cloud Builds sometimes need code or data to work on to be useful.

All Cloud Builds are launched in a serverless environment with a default directory /workspace/. The source is copied into this workspace before the build steps execute, so steps can share state and files. Cloud Build sources are specified by the source argument.
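Because every step starts in the same workspace, a file written by one step can be read by the next, even though each step runs in its own container. The sketch below illustrates this with a bash step followed by an R step. It assumes googleCloudRunner is installed; the file name `shared.txt` is made up for the example, and nothing is submitted to Cloud Build.

```r
# Sketch only: assumes the googleCloudRunner package is installed.
if (requireNamespace("googleCloudRunner", quietly = TRUE)) {
  library(googleCloudRunner)

  shared_steps <- c(
    # step 1 writes a file into the working directory (the workspace)
    cr_buildstep_bash("echo 'hello from step 1' > shared.txt"),
    # step 2 runs in a different image but starts in the same directory
    cr_buildstep_r("print(readLines('shared.txt'))")
  )

  shared_yaml <- cr_build_yaml(steps = shared_steps)
  print(shared_yaml)
  # cr_build(shared_yaml) would run it, given an authenticated project
}
```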

A source can be a Cloud Source Repository (perhaps mirrored from GitHub) or a Cloud Storage bucket containing the code/data you want to operate on. An example of specifying both is below:

gcs_source <- cr_build_source(
  StorageSource("gs://my-bucket", "my_code.tar.gz"))

repo_source <- cr_build_source(
  RepoSource("github_markedmondson1234_googlecloudrunner",
             branchName="master"))

build1 <- cr_build("cloudbuild.yaml", source = gcs_source)
build2 <- cr_build("cloudbuild.yaml", source = repo_source)

cr_build_upload_gcs() is a helper function for automating creation of a Google Cloud Storage source - this uses googleCloudStorageR to tar your local source code and upload it to your bucket, making it available to your build.

This returns a Source object that can be used in build functions:

storage <- cr_build_upload_gcs("my_folder")
cr_build(my_yaml, source = storage)

By default this will place your local folder’s contents in the /workspace/deploy/ folder. For buildsteps to access those files you may want to add dir="deploy" to them so they will have their working directory start from there.
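A minimal sketch of adding dir = "deploy" to buildsteps is shown below. It assumes googleCloudRunner is installed and that the helper functions forward extra arguments such as dir to cr_buildstep(). The upload and build calls are left as comments because they need an authenticated project and bucket.

```r
# Sketch only: assumes the googleCloudRunner package is installed.
if (requireNamespace("googleCloudRunner", quietly = TRUE)) {
  library(googleCloudRunner)

  deploy_steps <- c(
    # dir = "deploy" makes the step start in /workspace/deploy
    cr_buildstep_bash("ls -la", dir = "deploy"),
    cr_buildstep_r("print(list.files())", dir = "deploy")
  )

  deploy_yaml <- cr_build_yaml(steps = deploy_steps)
  print(deploy_yaml)

  # With authentication and a bucket configured, the upload would be:
  # storage <- cr_build_upload_gcs("my_folder")
  # cr_build(deploy_yaml, source = storage)
}
```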

Cloud Build macros

Cloud Builds can use reserved macros and variables to help with deployments in a continuous development situation. For instance, files can be named according to the Git branch they are committed from. These are listed in ?Build and reproduced below:

- $PROJECT_ID: the project ID of the build.

- $BUILD_ID: the autogenerated ID of the build.

- $REPO_NAME: the source repository name specified by RepoSource.

- $BRANCH_NAME: the branch name specified by RepoSource.

- $TAG_NAME: the tag name specified by RepoSource.

- $REVISION_ID or $COMMIT_SHA: the commit SHA specified by RepoSource or resolved from the specified branch or tag.

- $SHORT_SHA: first 7 characters of $REVISION_ID or $COMMIT_SHA.

Custom macros can also be configured, starting with $_ e.g. $_MY_CUSTOM_MACRO
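Two of these rules can be illustrated in plain R. The sketch below derives a $SHORT_SHA-style value from a made-up commit SHA and checks whether a name looks like a custom macro. The pattern used (`$_` followed by upper-case letters, digits or underscores) is my understanding of Cloud Build's naming rule for user-defined substitutions and should be treated as an assumption; `is_custom_macro` is a hypothetical helper.

```r
# Made-up commit SHA, for illustration only
commit_sha <- "0123456789abcdef0123456789abcdef01234567"

# $SHORT_SHA is described above as the first 7 characters of the SHA
short_sha <- substr(commit_sha, 1, 7)
short_sha

# Hypothetical helper: assumed naming rule for custom macros
is_custom_macro <- function(x) grepl("^\\$_[A-Z0-9_]+$", x)

is_custom_macro(c("$_MY_CUSTOM_MACRO", "$PROJECT_ID", "$_lowercase"))
```

Here only the first name passes the check: $PROJECT_ID is a reserved macro without the underscore, and the last name uses lower-case letters.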

Creating cloudbuild.yml build steps

Instead of using separate cloudbuild.yml files, you can also choose to make your own cloudbuild.yaml files in R via cr_build_yaml() and cr_buildstep().

Let’s say you don’t want to write a cloudbuild.yaml file manually - instead you can create all the features of the yaml files in R. Refer to the cloudbuild.yml config spec for what is expected in the files or functions.

An example below recreates a simple cloudbuild.yml file. If you print it to the console it will output what the build would look like if it were in a yaml file:

cr_build_yaml(steps = cr_buildstep("gcloud", "version"))
#==cloudRunnerYaml==
#steps:
#- name: gcr.io/cloud-builders/gcloud
#  args: version

You can write back out into your own cloudbuild.yml

my_yaml <- cr_build_yaml(steps = cr_buildstep("gcloud", "version"))
cr_build_write(my_yaml, file = "cloudbuild.yaml")

You can also edit or extract steps from existing cloudbuild.yml files via cr_buildstep_edit() and cr_buildstep_extract().

This allows you to programmatically create cloudbuild yaml files for other languages and triggers. See more at this article on creating custom build steps with your own Docker images.

Pre-made Build Step templates

Using the above build step editing functions, some helpful build steps you can use in your own cloudbuild steps have been included in the package.

- cr_buildstep_gcloud() - an optimised docker for gcloud, bq, gsutil or kubectl commands

- cr_buildstep_bash() - for including bash scripts

- cr_buildstep_docker() - for building and pushing Docker images

- cr_buildstep_secret() - storing secrets in the cloud and decrypting them

- cr_buildstep_decrypt() - for using Google Key management store to decrypt auth files

- cr_buildstep_git() - for setting up and running git commands

- cr_buildstep_mailgun() - send an email with Mailgun.org

- cr_buildstep_nginx_setup() - setup hosting HTML files with nginx on Cloud Run

- cr_buildstep_pkgdown() - for setting up pkgdown documentation of an R package

- cr_buildstep_r() - for running R code

- cr_buildstep_slack() - send a Slack webhook message

If you have any requests for others, please raise an issue on GitHub.

Combine buildsteps with c() e.g.

cr_build_yaml(
  steps = c(
    cr_buildstep("ubuntu", "echo hello"),
    cr_buildstep_gcloud("gcloud", "version"),
    cr_buildstep_docker("my-image", tag = "dev"),
    cr_buildstep_secret("my_secret", "auth.json"),
    cr_buildstep_r("sessionInfo()")),
  images = "gcr.io/my-project/my-image")

Build Artifacts

You may have some useful files or data after your buildsteps that you want to use later. You can specify these files as artifacts that will be uploaded to a Google Cloud Storage bucket after the build finishes. A helper function cr_build_artifacts() will take your build object and download the files to your local directory via googleCloudStorageR.

r <- "write.csv(mtcars,file = 'artifact.csv')"

ba <- cr_build_yaml(
  steps = cr_buildstep_r(r),
  artifacts = cr_build_yaml_artifact('artifact.csv')
)

build <- cr_build(ba)
built <- cr_build_wait(build)

cr_build_artifacts(built)
# 2019-12-22 12:36:10 -- Saved artifact.csv to artifact.csv (1.7 Kb)

read.csv("artifact.csv")
#                X  mpg cyl  disp  hp drat    wt  qsec vs am gear carb
#1       Mazda RX4 21.0   6 160.0 110 3.90 2.620 16.46  0  1    4    4
#2   Mazda RX4 Wag 21.0   6 160.0 110 3.90 2.875 17.02  0  1    4    4
#3      Datsun 710 22.8   4 108.0  93 3.85 2.320 18.61  1  1    4    1
#4  Hornet 4 Drive 21.4   6 258.0 110 3.08 3.215 19.44  1  0    3    1
# ... etc ...

Build Logs

You can view the logs of the build locally in R using cr_build_logs().

Pass in the build object returned by cr_build_wait() or cr_build_status():
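Judging by the example output in this section, cr_build_logs() returns a character vector with one element per log line, so ordinary string functions can be used to search it. The sketch below works on a hard-coded sample copied from that output rather than a live build, so it runs without authentication; the variable names are made up for the example.

```r
# Sample log lines, standing in for the result of cr_build_logs()
logs <- c(
  "starting build \"6ce86e05-b0b1-4070-a849-05ec9020fd3b\"",
  "",
  "FETCHSOURCE",
  "BUILD",
  "Already have image (with digest): gcr.io/cloud-builders/gcloud",
  "Google Cloud SDK 325.0.0"
)

# Drop empty lines, then pull out the line reporting the SDK version
non_empty <- logs[nzchar(logs)]
sdk_line <- grep("^Google Cloud SDK", non_empty, value = TRUE)

# Strip the label to leave just the version string
sdk_version <- sub("^Google Cloud SDK ", "", sdk_line)
sdk_version
```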

s_yaml <- cr_build_yaml(steps = cr_buildstep( "gcloud","version"))

build <- cr_build_make(s_yaml)

built <- cr_build(build)

the_build <- cr_build_wait(built)

cr_build_logs(the_build)

# [1] "starting build \"6ce86e05-b0b1-4070-a849-05ec9020fd3b\""

# [2] ""

# [3] "FETCHSOURCE"

# [4] "BUILD"

# [5] "Already have image (with digest): gcr.io/cloud-builders/gcloud"

# [6] "Google Cloud SDK 325.0.0"

# [7] "alpha 2021.01.22"

# [8] "app-engine-go 1.9.71"

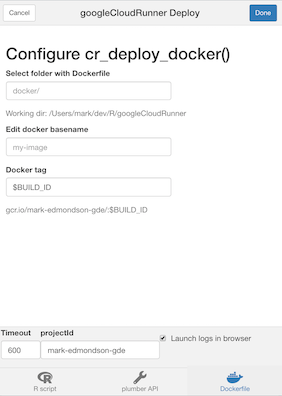

# ...RStudio Gadget - build Docker

If you are using RStudio, installing the library will enable an RStudio Addin that can be called after you have set up the library as per the setup page.

It includes a Shiny gadget that you can call via the Addin menu in RStudio, via googleCloudRunner::cr_deploy_gadget(), or via an assigned hotkey (I use CTRL+SHIFT+D).

This sets up a Shiny UI to help smooth out deployments as pictured:

R buildsteps

Focusing on one buildstep in particular, since this is an R package - you can send R code into a build using cr_buildstep_r().

It accepts either inline R code or a file location. This R code is executed in the R environment specified in the argument name - it defaults to the R images provided by the Rocker project (rocker-project.org).

If you want to build your own images (perhaps in another Cloud Build using cr_deploy_docker()) you can use your own R images with custom R packages and resources.

Some useful R images have been made that you could use directly, or you could refer to their Dockerfiles:

- gcr.io/gcer-public/packagetools - installs: devtools covr rhub pkgdown goodpractice httr plumber rmarkdown

- gcr.io/gcer-public/render_rmd - installs: pkgdown rmarkdown flexdashboard blogdown bookdown

- gcr.io/gcer-public/googlecloudrunner - installs: containerit, googleCloudStorageR, plumber, googleCloudRunner

The R code can be created within the Build at build time, or you can refer to an existing R script within the Source.

# create an R buildstep inline
cr_buildstep_r(c("paste('1+1=', 1+1)", "sessionInfo()"))

# create an R buildstep from a local file
cr_buildstep_r("my-r-file.R")

# create an R buildstep from a file within the source of the Build
cr_buildstep_r("inst/schedule/schedule.R", r_source = "runtime")

# use a different Rocker image e.g. rocker/verse
cr_buildstep_r(c("library(dplyr)", "mtcars %>% select(mpg)", "sessionInfo()"),
               name = "verse")

# use your own R image with custom R
my_r <- c("devtools::install()", "pkgdown::build_site()")
br <- cr_buildstep_r(my_r, name = "gcr.io/gcer-public/packagetools:latest")

# send it for building
cr_build(cr_build_yaml(steps = br))

Build Triggers

Once you have build steps and possibly a source created, you can either set these up to run on a schedule via cr_schedule() or you can use triggers that will run upon certain events.

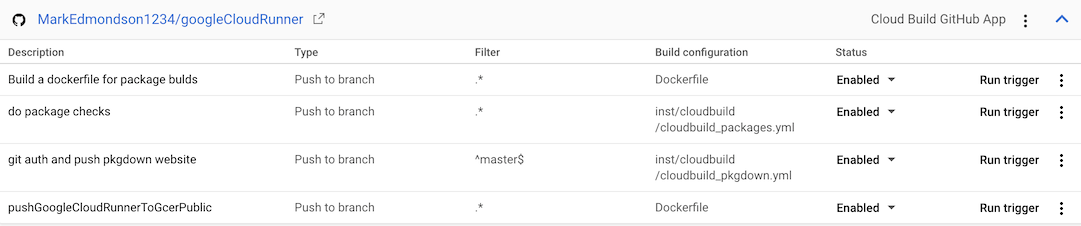

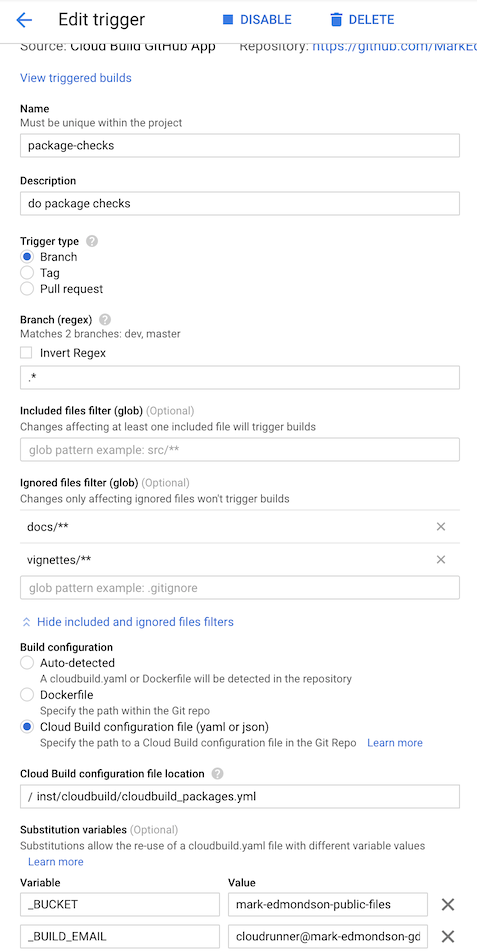

Setting up Build Triggers in the Web UI

The quickest way to get going is to use the web UI for Build Triggers (https://console.cloud.google.com/cloud-build/triggers)

- Link your repo (GitHub, Bitbucket or Cloud Repositories) to Google

- Create your Cloud Build using cr_build_yaml() etc.

- Write out to a cloudbuild.yml file in your repository (by default the root directory is checked)

- Set up the Build Trigger in the Web UI (https://console.cloud.google.com/cloud-build/triggers)

- Make a git commit and check the Cloud Build has run in the history (https://console.cloud.google.com/cloud-build/builds)

- Modify the cloudbuild.yml file as you need, recommit to trigger a new build.

Learn how to set them up at this Google article on creating and managing build triggers.

From there you get the option of connecting a repository either by mirroring it from GitHub/Bitbucket or using Cloud Source Repositories.

You can set up either Docker builds by just providing the location of the Dockerfile, or have more control by providing a cloudbuild.yml - by default these are looked for in the root directory.

Here are some examples for this package’s GitHub repo:

- The “do package checks” performs authenticated tests against the package

- The “git auth and push pkgdown website” rebuilds the website on each commit to the master branch.

- “Build a dockerfile for package builds” builds the gcr.io/gcer-public/packagetools image

- “pushGoogleCloudRunnerToGcerPublic” builds the gcr.io/gcer-public/googlecloudrunner image

Build Triggers via code

Build Triggers can be made via R code using the cr_buildtrigger() function.

Build Triggers include GitHub commits and pull requests.

The example below shows how to set up some of the builds above:

cloudbuild <- system.file("cloudbuild/cloudbuild.yaml",
                          package = "googleCloudRunner")
bb <- cr_build_make(cloudbuild, projectId = "test-project")
github <- cr_buildtrigger_repo("MarkEdmondson1234/googleCloudRunner", branch = "master")

cr_buildtrigger(bb, name = "trig1", trigger = github)

The Build macros can also be configured by passing in a named list:

# creates a trigger with named substitutions

ss <- list(`$_MYVAR` = "TEST1", `$_GITHUB` = "MarkEdmondson1234/googleCloudRunner")
cr_buildtrigger("trig2", trigger = github, build = bb, substitutions = ss)

The above examples will create the build steps inline within the trigger, but you can also use existing cloudbuild.yaml files by specifying where in the repository the build file will be:

# create a trigger that will build from the file in the repo
cr_buildtrigger("cloudbuild.yaml", name = "trig3", trigger = github)

This way you can commit changes to the cloudbuild.yaml file, and they will be reflected in the Build Trigger.

Connecting to GitHub

You can connect via the Source Repository mirroring service or via the Google Cloud Build GitHub app - see the git setup page for more details.