GA imports into BigQuery via Polyglot Cloud Builds - integrating R code with other languages

2022-03-26

Source: vignettes/usecase-polygot-ga-imports-to-bigquery.Rmd

Since Docker containers can hold any language within them, they offer a universal interface for combining languages. This makes it possible to extend other languages with R features, and gives other languages access to R code without their users needing to know R.

The example below uses:

- gcloud: Google’s Cloud command line tool, used to access Google’s key management store, download an authentication file, and push to BigQuery
- gago: a Go package for fast downloads of Google Analytics data
- R: R code to create an Rmd file that will hold interactive forecasts of the Google Analytics data, via `cr_buildstep_r()`
- nginx: serves the Rmd files rendered into HTML and hosted on Cloud Run via `cr_deploy_html()`

The build downloads unsampled data from Google Analytics, creates a statistical report of the data, and then uploads the raw data to BigQuery for further analysis.
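As a rough mental model of the build that follows, each step boils down to a container image plus the arguments handed to it, which is why steps written in Go, R and bash can sit side by side. The sketch below uses plain R lists rather than googleCloudRunner itself. `resolve_image()` and `sketch_step()` are hypothetical helpers, and the prefixing rule (a bare name such as "gsutil" gets `gcr.io/cloud-builders/` prepended, a full image path is left alone) is a simplified assumption based on the `cr_buildstep()` documentation, not its actual implementation.

```r
# Hypothetical helper: mimic how a bare builder name is assumed to be
# expanded into a full image path (an assumption, not package code).
resolve_image <- function(name, prefix = "gcr.io/cloud-builders/") {
  if (grepl("/", name, fixed = TRUE)) name else paste0(prefix, name)
}

# Hypothetical helper: a build step as a plain named list, using field
# names from the Cloud Build schema (id, name, args, dir).
sketch_step <- function(id, name, args, dir = NULL) {
  step <- list(id = id, name = resolve_image(name), args = as.list(args))
  if (!is.null(dir)) step$dir <- dir
  step
}

steps <- list(
  sketch_step("download Rmd template", "gsutil",
              c("cp", "gs://mark-edmondson-public-read/polygot.Rmd",
                "/workspace/build/polygot.Rmd")),
  sketch_step("download google analytics", "gcr.io/gcer-public/gago:master",
              c("reports", "--view=81416156"), dir = "build")
)

# One image per step: a Google-provided builder and a custom Go tool
images <- vapply(steps, function(s) s$name, character(1))
print(images)
```

Nothing in a step depends on the language inside the image, only on the files each step leaves in the shared `/workspace` directory.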

    library(googleCloudRunner)

    polygot <- cr_build_yaml(
      steps = c(
        # gsutil: fetch the encrypted Google Analytics auth file
        cr_buildstep(
          id = "download encrypted auth file",
          name = "gsutil",
          args = c("cp",
                   "gs://marks-bucket-of-stuff/auth.json.enc",
                   "/workspace/auth.json.enc")
        ),
        # gcloud: decrypt the auth file using the key management store
        cr_buildstep_decrypt(
          id = "decrypt file",
          cipher = "/workspace/auth.json.enc",
          plain = "/workspace/auth.json",
          keyring = "my-keyring",
          key = "ga_auth"
        ),
        # Go: download unsampled Google Analytics data with gago
        cr_buildstep(
          id = "download google analytics",
          name = "gcr.io/gcer-public/gago:master",
          env = c("GAGO_AUTH=/workspace/auth.json"),
          args = c("reports",
                   "--view=81416156",
                   "--dims=ga:date,ga:medium",
                   "--mets=ga:sessions",
                   "--start=2014-01-01",
                   "--end=2019-11-30",
                   "--antisample",
                   "--max=-1",
                   "-o=google_analytics.csv"),
          dir = "build"
        ),
        # gsutil: fetch the Rmd report template
        cr_buildstep(
          id = "download Rmd template",
          name = "gsutil",
          args = c("cp",
                   "gs://mark-edmondson-public-read/polygot.Rmd",
                   "/workspace/build/polygot.Rmd")
        ),
        # R: render every Rmd file in the build directory to HTML
        cr_buildstep_r(
          id = "render rmd",
          r = "lapply(list.files('.', pattern = '.Rmd', full.names = TRUE),
                 rmarkdown::render, output_format = 'html_document')",
          name = "gcr.io/gcer-public/packagetools:latest",
          dir = "build"
        ),
        # bash: run the nginx setup script shipped with googleCloudRunner
        cr_buildstep_bash(
          id = "setup nginx",
          bash_script = system.file("docker", "nginx", "setup.bash",
                                    package = "googleCloudRunner"),
          dir = "build"
        ),
        # Docker: build the image that serves the rendered HTML
        cr_buildstep_docker(
          # change to your own container registry
          image = "gcr.io/gcer-public/polygot_demo",
          tag = "latest",
          dir = "build"
        ),
        # Cloud Run: deploy the image
        cr_buildstep_run(
          name = "polygot-demo",
          image = "gcr.io/gcer-public/polygot_demo",
          concurrency = 80
        ),
        # bq: load the raw CSV into BigQuery
        cr_buildstep(
          id = "load BigQuery",
          name = "gcr.io/google.com/cloudsdktool/cloud-sdk:alpine",
          entrypoint = "bq",
          args = c("--location=EU",
                   "load",
                   "--autodetect",
                   "--source_format=CSV",
                   "test_EU.polygot",
                   "google_analytics.csv"),
          dir = "build"
        )
      )
    )

    # build the polyglot cloud build steps
    build <- cr_build(polygot)

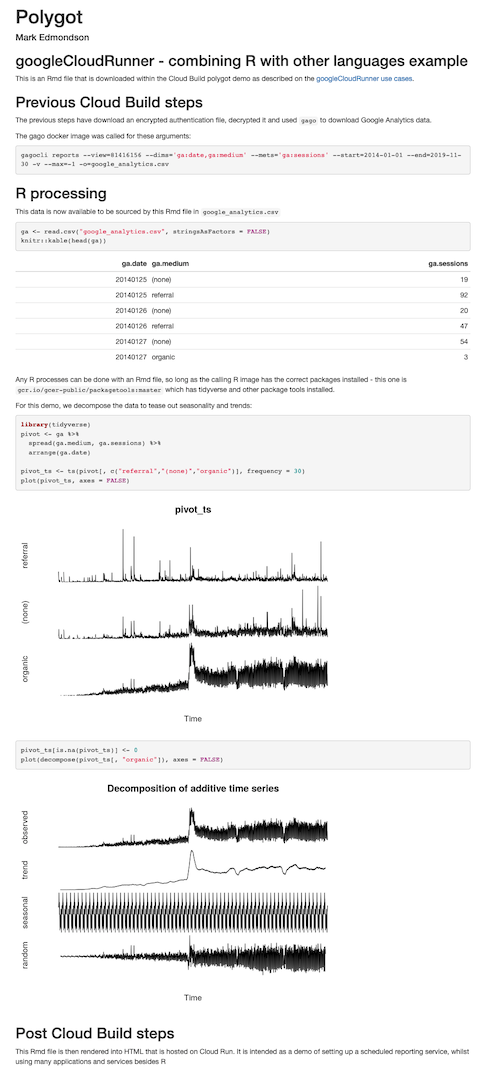
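Because the gago step above is just a vector of command line flags, the report request can be generated from R values instead of being typed out by hand, for example to change the view or date range. The helper below is a hypothetical sketch: `gago_report_args()` is not part of googleCloudRunner or gago, and it only reuses the flags that already appear in that step.

```r
# Hypothetical helper: build the argument vector for the gago "reports"
# command, using only the flags shown in the build step above.
gago_report_args <- function(view, dims, mets, start, end,
                             out = "google_analytics.csv") {
  c("reports",
    paste0("--view=", view),
    paste0("--dims=", paste(dims, collapse = ",")),
    paste0("--mets=", paste(mets, collapse = ",")),
    paste0("--start=", format(as.Date(start), "%Y-%m-%d")),
    paste0("--end=", format(as.Date(end), "%Y-%m-%d")),
    "--antisample",
    "--max=-1",
    paste0("-o=", out))
}

args <- gago_report_args(
  view  = "81416156",
  dims  = c("ga:date", "ga:medium"),
  mets  = "ga:sessions",
  start = "2014-01-01",
  end   = "2019-11-30"
)
print(args)
```

The resulting vector could be passed as `args` to the `cr_buildstep()` call for gago. Note that the values are fixed when the build object is created, not when the build runs.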

    built <- cr_build_wait(build)

An example of the demo output is on this Cloud Run instance URL:

https://polygot-demo-ewjogewawq-ew.a.run.app/polygot.html

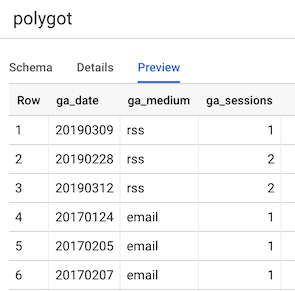

It also uploads the data to a BigQuery table.

The constructed Cloud Build can also be used outside of R, by writing out the Cloud Build file via `cr_build_write()`:

    # write out to cloudbuild.yaml for other languages
    cr_build_write(polygot)
    # 2019-12-28 19:15:50> Writing to cloudbuild.yaml

This can then be scheduled as described in the Cloud Scheduler section on scheduled Cloud Builds.
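Schedules for Cloud Scheduler are written as five-field unix-cron strings, such as the "15 8 * * *" used in the call that follows. As a small aid for reading them, the sketch below splits such a string into named fields. `cron_fields()` is a hypothetical helper, not a googleCloudRunner function, and it does no validation beyond counting the fields.

```r
# Hypothetical helper: label the five fields of a unix-cron string.
cron_fields <- function(schedule) {
  parts <- strsplit(trimws(schedule), "[[:space:]]+")[[1]]
  stopifnot(length(parts) == 5)
  names(parts) <- c("minute", "hour", "day_of_month", "month", "day_of_week")
  parts
}

fields <- cron_fields("15 8 * * *")
print(fields)
# Minute 15 of hour 8, on every day of every month: 08:15 daily
```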

    schedule_me <- cr_schedule_http(built)
    cr_schedule("polygot-example", "15 8 * * *", httpTarget = schedule_me)

An example of the resulting `cloudbuild.yaml` is available on GitHub.
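Once `cloudbuild.yaml` has been written out, it can be submitted without any R code through the gcloud CLI. To stay in one language, the sketch below assembles that command from R and only runs it on request. It assumes gcloud is installed and authenticated against a project with Cloud Build enabled, and `submit_cloudbuild()` is a hypothetical wrapper, not a googleCloudRunner function.

```r
# Hypothetical wrapper: submit a written-out build file with the gcloud CLI.
# --no-source is used because the build steps fetch their own inputs.
submit_cloudbuild <- function(config = "cloudbuild.yaml", run = FALSE) {
  args <- c("builds", "submit", "--no-source", paste0("--config=", config))
  if (run) {
    # Assumes gcloud is on the PATH and already authenticated
    return(system2("gcloud", args))
  }
  # Dry run: return the command line that would be executed
  paste("gcloud", paste(args, collapse = " "))
}

cmd <- submit_cloudbuild()
print(cmd)
```

Calling `submit_cloudbuild(run = TRUE)` would execute the command. The same line can be pasted into a shell or a CI job that has no R installed.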